Create with AI

About this case study

This case study explores how using AI to generate or import quiz content could increase user activation.

About Socrative

Socrative has a long history as one of the simplest yet most powerful freemium quizzing apps that teachers trust. Socrative was founded in 2010 and acquired by Showbie in 2018. I joined the team in 2020 and have been gradually replacing the legacy UI with a modernized design.

Responsibilities

Leading a workshop

Defining the problem

Competitive research

Defining product requirements

User flows

Wireframing

Prototyping

Defining success metrics

Problems to solve

Finding and creating quizzes takes significant effort, because teachers have to find lesson content, write questions creatively, and fill in the numerous input fields that make up each quiz question.

Creating your first quiz requires entering a handful of inputs for every question.

Initial research

Quantitative data shows a 2.2x higher activation rate when users discover new quizzes from our free quiz library or import content from another source.

Our free quiz library lets you explore new questions, but AI would make it much easier to find relevant questions based on your specific needs.

We also discovered through studies and surveys that “planning and preparation” is the second most time-consuming part of a teacher’s day, taking up 20-30% of their time, so generating quizzes would be a powerful way to save time.

Leading a workshop

This project was a new area for our team to explore, so I prepared the team by running a two-day workshop that led product management and development through activities designed to help us understand the user and discover solutions:

Hopes & Fears: This activity captures a group's attitudes toward a project. Hopes uncover expectations about what can be done; fears uncover doubts about the project at hand. We found that we needed to explore the AI technology early to keep scope creep in check, for example by engineering and testing our backend prompt to improve the quality of the AI’s output.

Empathy mapping: This activity helps identify the thoughts and feelings of our users when they’re building their library with or without AI. The key insights were the importance of content accuracy and relevance, making the AI easy to use for first-time users, and gathering content suitable for every learning level and standard.

Value assessment: We listed the strengths and problems of the current ways to build a library in Socrative. Finding relevant content quickly and importing existing content were by far the most difficult problems that AI could solve.

How-might-we: Constructing how-might-we questions generates creative solutions while keeping teams focused on the right problems to solve. I equipped the team with a format that helps them frame the problem as a question: “How might we improve [what] so that the [who] can [why] during [when]?” This took everything we learned from the previous activities and challenged us to define a concise how-might-we question. What we came up with was: “How might we make the quiz creation process fast and effective, so that new and existing users can get valuable quiz content during onboarding and class preparation?”

Sketch and present solutions: We used our problem statement to describe or draw our solutions. A few notable ideas: reading your existing Socrative quizzes to create new quizzes, onboarding flows that give users the option to generate a quiz right after signup, and aligning generated content to a quiz standard.

Screenshot of the whiteboard I created for our workshop.

LLM (Large Language Model) experiment

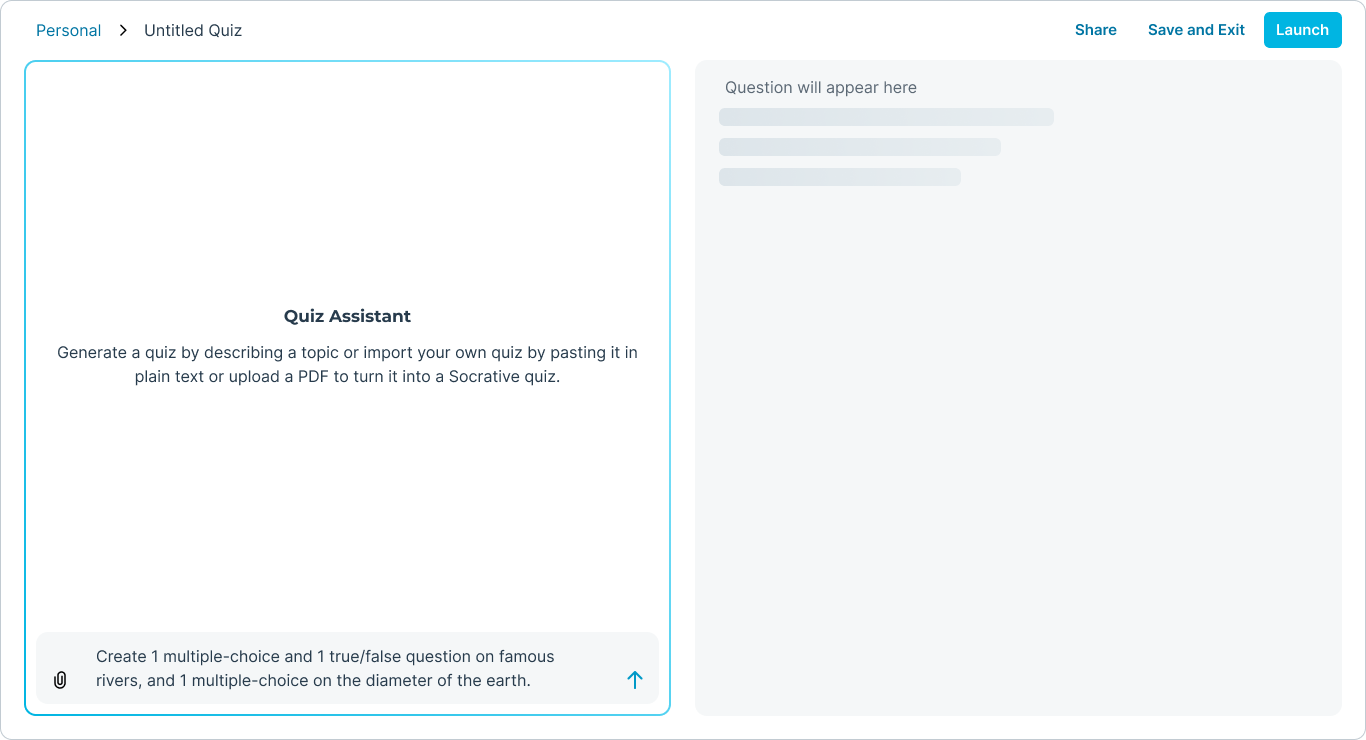

The team needed to understand whether we should use a simple form that produces a specific output or a conversational approach with an LLM. I created a prototype that demonstrated how an LLM could ask questions to gather information before creating a quiz.

AI is a new technology and users may not be confident prompt writers, so a conversation could guide them through using it.

I created a custom GPT called “quiz builder” that would ask users a few short questions and then output quiz questions into our app.

I created a presentation to share our conclusion: LLMs are good at guiding users and good at generating questions, but were inconsistent when asked to do both.

A mockup showing the way the LLM could appear in Socrative.

Defining design requirements

By the end of the workshop we had a strong understanding of the user, a handful of initial solutions, and a clearly defined problem to solve. This gave me enough to write product requirements that paint a clear vision and facilitate discussion about what to include in scope. The design requirements are:

A consistent UI for every way users can generate content.

Relevant content should be found in as few steps as possible.

Guide users with detailed instructions on how to use AI.

Be transparent about how we use their information.

Inform users of their responsibility to validate the accuracy of generated content.

Competitor research

I explored and documented several quizzing apps on a whiteboard (Miro) to understand how they use AI. This served as inspiration and gave clues about what to expect as we explored a solution to our specific problem. I found that many of the competitor products shared strong similarities to learn from, such as staging generated questions for users to review before adding the high-quality ones to their quiz. However, all of them generated only multiple-choice questions.

We decided to go beyond multiple choice and also support True/False and Short Answer questions to differentiate ourselves from competitors.

Wireframing and user flow

Simple wireframes were drawn to explore the functionality and user flow. The wireframes were presented and discussed with designers, developers, and product managers to solidify a direction before going into higher fidelity work.

The wireframes kept AI accessible from anywhere in the quiz creation process, so users can use it to create an entire quiz from scratch or just add a question to an existing quiz.

I also surfaced the AI actions inline with the usual way you add a new quiz or question, so that it’s streamlined with the way you already use Socrative.

Design discovery

Multiple drafts of high-fidelity designs were explored with the product team and the wider company. We discussed details such as how many questions to generate by default, how users would adjust question difficulty, the right loading state, which question types could be generated, and whether every generated question should include a correct answer.

Once we were satisfied, I packaged and captioned the mockups in a handoff presentation with instructions for development.

The above is a screenshot of one of many Figma pages.

Drawer prototype

I created a Figma prototype of the main flow for generating questions so my team could get an impression of a working solution. The drawer made it easy to discover new questions and see your existing questions at any step of the quiz creation process.

Screenshot of the prototype with the “Generate Questions” drawer open. Two questions have been added from the drawer and appear in the quiz to the left of the drawer.

Video walkthrough

This recording walks through the complete build.

Results

When comparing activation between our free quiz library and AI, only 14 out of 68 free quiz library users activated, while 130 out of 557 AI users did.
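Raw counts from cohorts of very different sizes are hard to compare directly, so it can help to normalize them into activation rates. The short Python sketch below does that arithmetic using only the counts reported above; the cohort labels and the `activation_rate` helper are illustrative names, not part of Socrative's analytics.

```python
# Activation counts reported in the Results section (activated, total users).
# The cohort labels and helper name are illustrative only.
cohorts = {
    "free quiz library": (14, 68),
    "AI": (130, 557),
}


def activation_rate(activated: int, total: int) -> float:
    """Return the share of a cohort's users who activated, as a percentage."""
    return 100 * activated / total


rates = {name: activation_rate(a, n) for name, (a, n) in cohorts.items()}

for name, rate in rates.items():
    print(f"{name}: {rate:.1f}%")  # free quiz library: 20.6%, AI: 23.3%
```

Normalized this way, both cohorts activate at a similar rate (about 21% versus 23%). The bigger difference is reach: AI was used by roughly eight times as many users (557 versus 68), so it produced far more activated users in absolute terms (130 versus 14).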

AI-generated questions now make up 25% of all questions created on Socrative.

The conversion rate for users who interacted with AI is 5x (YoY) higher than the average. Overall, the conversion rate has increased by 30% YoY since the release of Socrative AI (February 12 to April 08).

Contact me to see more of the process and progress of Socrative.